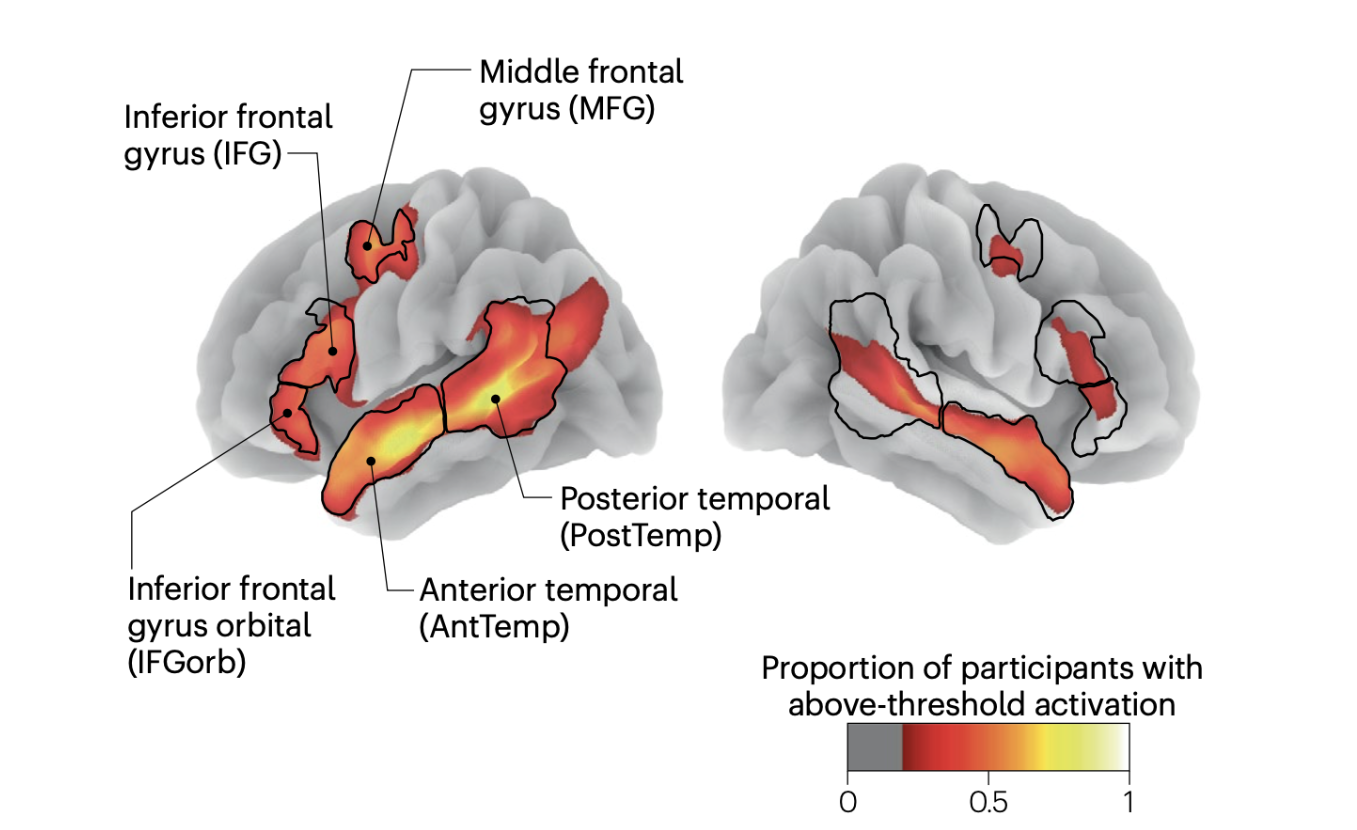

Large language models (LLMs) exhibit remarkable similarity to neural activity in the human language network. However, the key properties of language underlying this alignment, and how brain-like representations emerge and change across training, remain unclear. Here, we benchmark 34 training checkpoints spanning 300B tokens across 8 different model sizes to analyze how brain alignment relates to linguistic competence. Specifically, we find that brain alignment tracks the development of formal linguistic competence (i.e., knowledge of linguistic rules) more closely than functional linguistic competence. While functional competence, which involves world knowledge and reasoning, continues to develop throughout training, its relationship with brain alignment is weaker, suggesting that the human language network primarily encodes formal linguistic structure rather than broader cognitive functions. Notably, we find that the correlation between next-word prediction, behavioral alignment, and brain alignment fades once models surpass human language proficiency. We further show that model size is not a reliable predictor of brain alignment when controlling for the number of features. Finally, using the largest set of rigorous neural language benchmarks to date, we show that language brain alignment benchmarks remain unsaturated, highlighting opportunities for improving future models. Taken together, our findings suggest that the human language network is best modeled by formal, rather than functional, aspects of language.

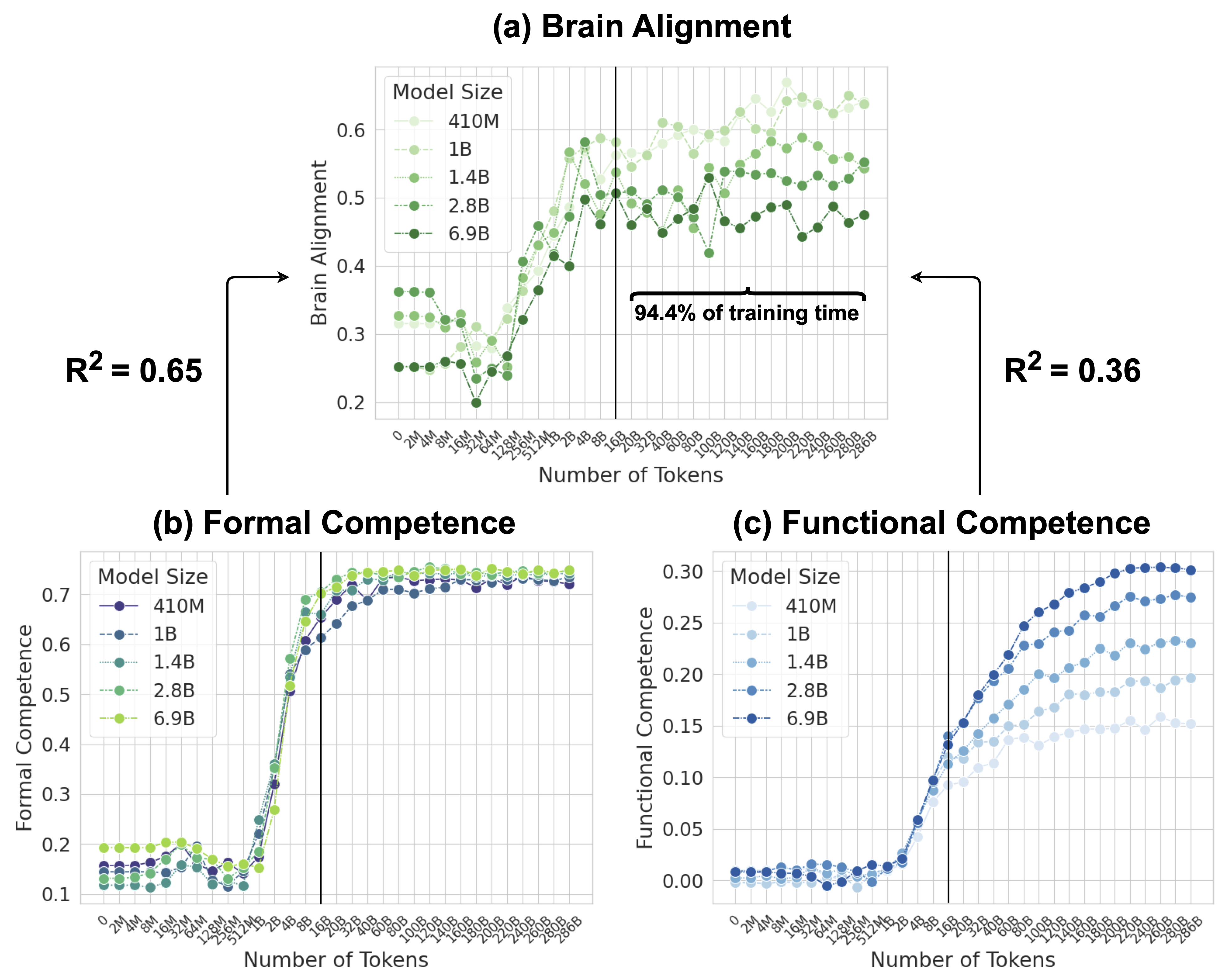

Evaluating Brain Alignment with Linear Predictivity and No Contextualization is Most Stringent. (a) Average brain alignment across 8 Pythia models under three conditions: (1) a pretrained model processing the original stimuli, (2) a pretrained model processing *random* sequences of the same length (averaged over five random seeds) as a control condition, and (3) the model with untrained parameters processing the original stimuli. The linear predictivity metric differentiates between meaningful and random stimuli most strongly, while RSA and CKA overestimate alignment. (b) Brain alignment on the Pereira2018 dataset under two cross-validation schemes: with contextualization (random sentence split) and without contextualization (story-based split).
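The two cross-validation schemes in (b) differ in whether sentences from the same story can appear on both sides of a train/test split. The following is a minimal sketch of that difference, assuming each sentence carries a story label. The function names and toy data are hypothetical and are not taken from the paper's code.

```python
import random
from collections import defaultdict


def random_sentence_folds(story_ids, n_folds, seed=0):
    """Assign each sentence to a fold at random (split with contextualization).

    Sentences from one story can end up in both train and test folds.
    """
    rng = random.Random(seed)
    indices = list(range(len(story_ids)))
    rng.shuffle(indices)
    folds = [[] for _ in range(n_folds)]
    for position, idx in enumerate(indices):
        folds[position % n_folds].append(idx)
    return folds


def story_based_folds(story_ids, n_folds, seed=0):
    """Assign whole stories to folds (split without contextualization).

    Every sentence of a story lands in the same fold, so a held-out story
    is never seen while fitting.
    """
    by_story = defaultdict(list)
    for idx, story in enumerate(story_ids):
        by_story[story].append(idx)
    stories = sorted(by_story)
    rng = random.Random(seed)
    rng.shuffle(stories)
    folds = [[] for _ in range(n_folds)]
    for position, story in enumerate(stories):
        folds[position % n_folds].extend(by_story[story])
    return folds


def stories_shared_with_train(folds, story_ids, test_fold):
    """Return the stories present in both the test fold and the remaining folds."""
    test_stories = {story_ids[i] for i in folds[test_fold]}
    train_stories = {
        story_ids[i]
        for k, fold in enumerate(folds)
        if k != test_fold
        for i in fold
    }
    return test_stories & train_stories


# Toy data (assumption): 6 stories with 4 sentences each.
story_ids = [f"story_{s}" for s in range(6) for _ in range(4)]
grouped = story_based_folds(story_ids, n_folds=3)
shuffled = random_sentence_folds(story_ids, n_folds=3)
```

With the story-based folds, `stories_shared_with_train` returns an empty set for every test fold. The random sentence split gives no such guarantee, which is one way to see why it tends to be the less stringent scheme.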

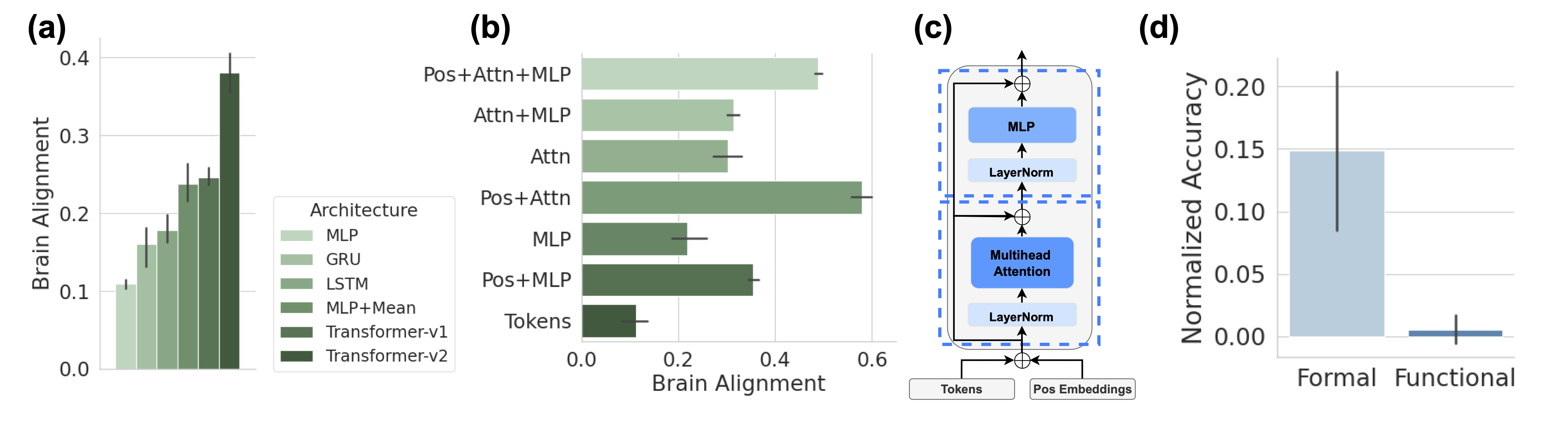

Context Integration drives Brain Alignment of Untrained Models. (a) Sequence-based models (GRU, LSTM, Transformers, and mean pooling) achieve higher brain alignment than models that rely solely on the last token representation (Linear, MLP), highlighting the importance of temporal integration. Error bars report five random initializations in all subplots. (b) Ablation study of architectural components in a single untrained Transformer-v2 block, demonstrating that attention mechanisms combined with positional encoding yield the highest brain alignment. (c) Diagram of the Transformer block architecture used in (b), with components grouped into attention (lower box) and MLP (upper box). (d) The average performance of five Pythia models with untrained parameters on formal and functional linguistic competence benchmarks, showing that formal competence exceeds chance level even in models with untrained parameters.
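The contrast in (a) between last-token and sequence-based representations can be illustrated with a small sketch. It assumes a sentence is given as a list of per-token feature vectors. The helper names and toy vectors are hypothetical, and the sketch shows only the pooling step, not the models compared in the figure.

```python
def last_token_representation(token_vectors):
    """Use only the final token's vector; earlier tokens are ignored."""
    return list(token_vectors[-1])


def mean_pooled_representation(token_vectors):
    """Average the vectors of all tokens, so every token contributes."""
    n_tokens = len(token_vectors)
    dim = len(token_vectors[0])
    return [sum(vec[d] for vec in token_vectors) / n_tokens for d in range(dim)]


# Two toy sentences (assumption) that differ in every token except the last.
sentence_a = [[1.0, 0.0], [0.0, 2.0], [3.0, 3.0]]
sentence_b = [[5.0, 5.0], [4.0, 4.0], [3.0, 3.0]]

same_last = last_token_representation(sentence_a) == last_token_representation(sentence_b)
same_mean = mean_pooled_representation(sentence_a) == mean_pooled_representation(sentence_b)
```

Here `same_last` is `True` and `same_mean` is `False`: without any mixing across positions, the last-token representation cannot distinguish the two sentences, whereas mean pooling retains information from the earlier tokens.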

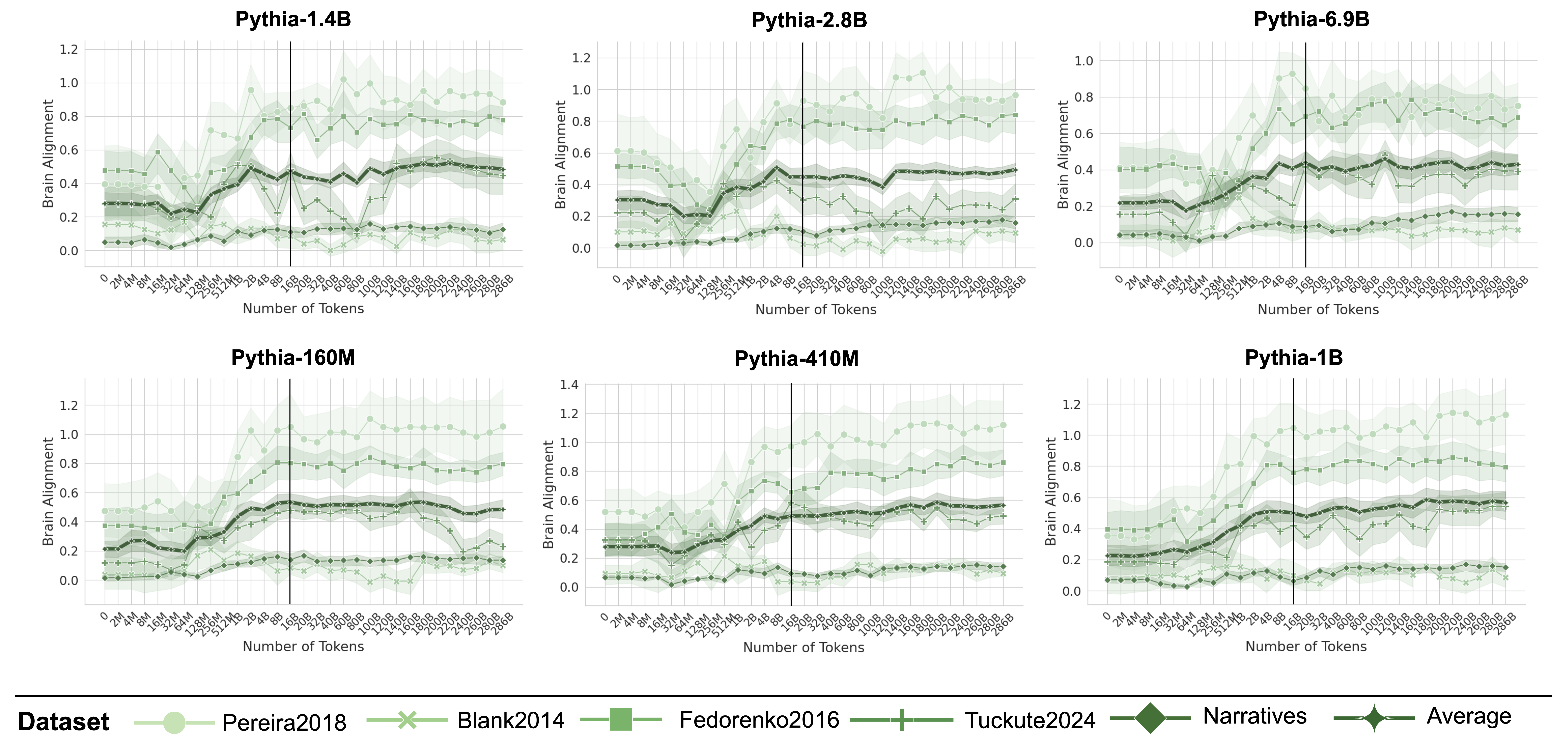

Brain Alignment Saturates Early on in Training. Plots indicate the brain alignment scores of three models from the Pythia model suite with varying sizes (log x-axis up to 16B tokens, uneven spacing after black line). Scores are normalized by their cross-subject consistency scores. Alignment quickly peaks around 2–8B tokens before saturating or declining, regardless of model size.
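The normalization mentioned above can be sketched as follows, assuming it is a plain division of each raw score by that benchmark's cross-subject consistency (its ceiling) followed by an average over benchmarks. The function names, benchmark names, and numbers are hypothetical placeholders, not values from the paper.

```python
def normalize_by_ceiling(raw_score, ceiling):
    """Express a raw alignment score as a fraction of the cross-subject ceiling."""
    if ceiling <= 0:
        raise ValueError("ceiling must be positive")
    return raw_score / ceiling


def average_normalized_alignment(raw_scores, ceilings):
    """Normalize each benchmark by its own ceiling, then average over benchmarks."""
    normalized = [
        normalize_by_ceiling(raw_scores[name], ceilings[name]) for name in raw_scores
    ]
    return sum(normalized) / len(normalized)


# Hypothetical raw scores and ceilings for two made-up benchmarks.
raw_scores = {"benchmark_a": 0.25, "benchmark_b": 0.10}
ceilings = {"benchmark_a": 0.50, "benchmark_b": 0.40}
alignment = average_normalized_alignment(raw_scores, ceilings)
```

Under this assumption, a normalized score of 1.0 would mean the model predicts the neural data as well as other subjects' data do.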

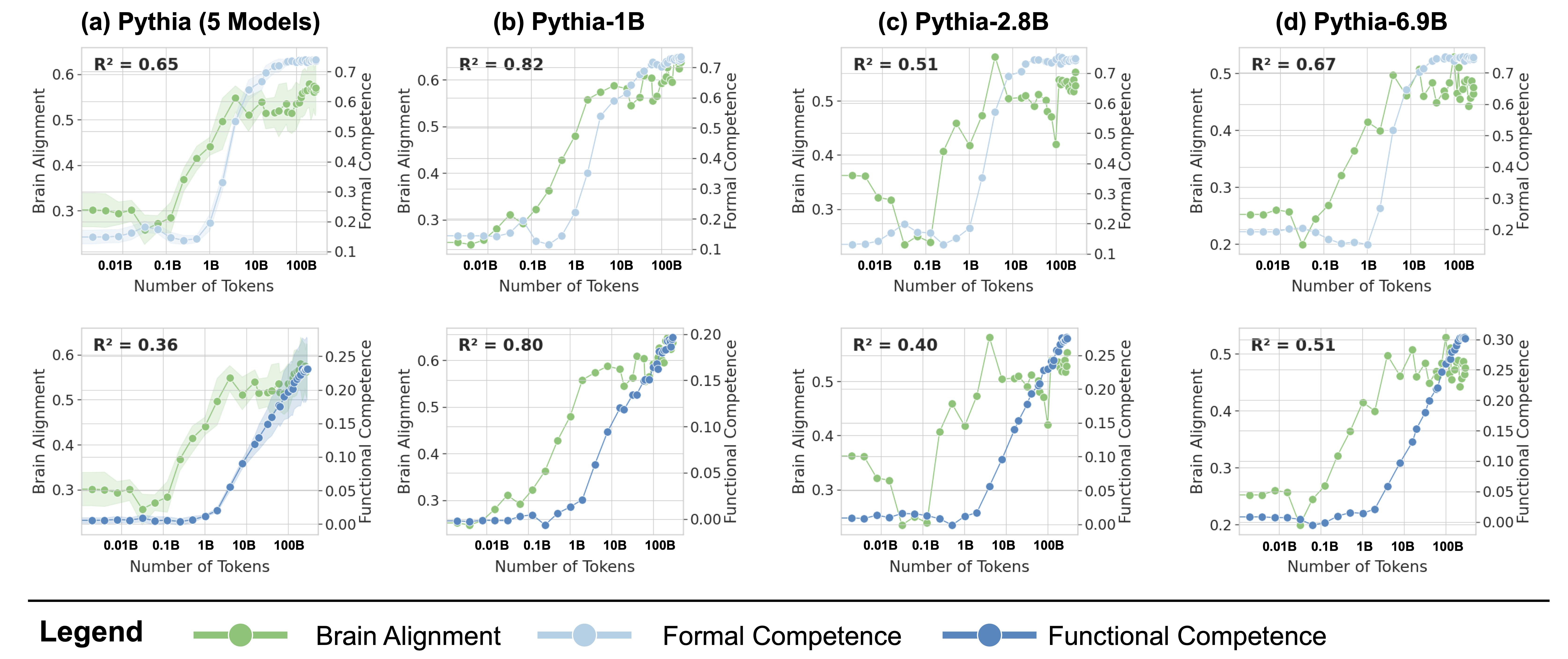

Formal Competence Tracks Brain Alignment More Closely Than Functional Competence. Each column compares how the evolution of formal competence (top) and functional competence (bottom) tracks the evolution of brain alignment during training. The R² values quantify the strength of this relationship, with higher values in formal competence suggesting it as the key driver of the observed brain alignment. (a): The data averaged across models of five different sizes. (b-d): The same comparison as in (a), but for models from the Pythia suite with three different sizes.
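One way to compute an R² of this kind is as the squared Pearson correlation between a competence score and brain alignment across checkpoints, which equals the R² of a one-variable linear fit. The sketch below makes that assumption. The per-checkpoint values are invented for illustration and are not results from the paper.

```python
def r_squared(x, y):
    """Squared Pearson correlation, equal to R² of a simple linear regression."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length of at least 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("R² is undefined for a constant series")
    return (cov * cov) / (var_x * var_y)


# Invented scores at five checkpoints (illustration only).
brain_alignment = [0.10, 0.30, 0.50, 0.60, 0.60]
formal_competence = [0.50, 0.70, 0.90, 1.00, 1.00]      # rises and plateaus with alignment
functional_competence = [0.25, 0.26, 0.30, 0.40, 0.55]  # keeps rising after the plateau

r2_formal = r_squared(brain_alignment, formal_competence)
r2_functional = r_squared(brain_alignment, functional_competence)
```

In this toy setup the series that plateaus together with brain alignment yields the higher R², while the series that keeps improving afterwards yields a lower one.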

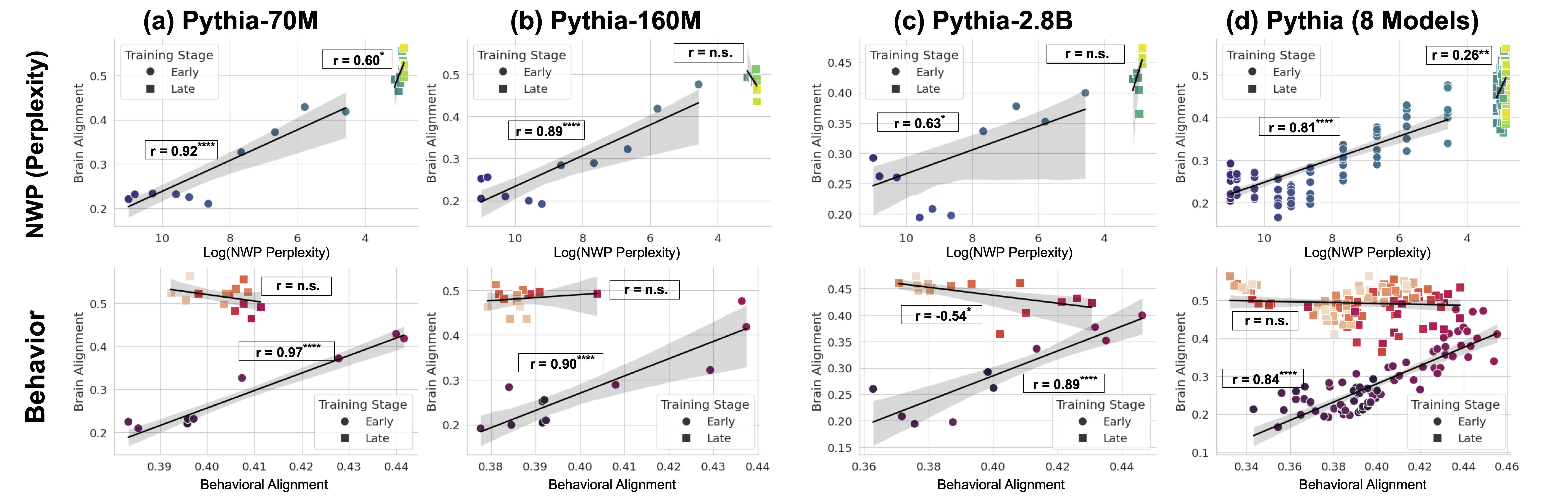

NWP and Behavioral Alignment Correlate with Brain Alignment Only in Early Training. (Top Row): Correlation between brain alignment and language modeling loss shows a strong, significant relationship during early training (up to 2B tokens), while this correlation weakens in later stages (up to ~300B tokens). Results are shown for three models and the average of all 8 models (last column). (Bottom Row): The same analysis, but for the correlation between brain alignment and behavioral alignment, revealing a similar trend: strong correlation early in training, but no significant relationship as models surpass human proficiency.

Individual Benchmark Scores for Formal and Functional Competence. (a-c): each column shows the evolution of individual benchmark scores for formal competence (top) and functional competence (bottom) during training. Data is presented for Pythia models of three different sizes. (d): the same as (a-c), with data averaged across models of five different sizes.

@inproceedings{alkhamissi-etal-2025-language-to-cognition,
  title = "From Language to Cognition: How {LLM}s Outgrow the Human Language Network",
  author = "AlKhamissi, Badr and
    Tuckute, Greta and
    Tang, Yingtian and
    Binhuraib, Taha Osama A and
    Bosselut, Antoine and
    Schrimpf, Martin",
  editor = "Christodoulopoulos, Christos and
    Chakraborty, Tanmoy and
    Rose, Carolyn and
    Peng, Violet",
  booktitle = "Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing",
  month = nov,
  year = "2025",
  address = "Suzhou, China",
  publisher = "Association for Computational Linguistics",
  url = "https://aclanthology.org/2025.emnlp-main.1237/",
  pages = "24332--24350",
  ISBN = "979-8-89176-332-6",
}
